Modern web applications often need to export reports as PDF files. Instead of building PDF layouts manually, ActiveReportsJS by Mescius allows developers to design report templates using a JSON format, then render these templates inside Next.js using dynamic parameters and data sources.

1. Introduction

In this article, we will explore how to use ActiveReportsJS inside a Next.js project to generate PDF documents on the server side. The workflow is simple: prepare a JSON report template, pass data and parameters to it, and let ActiveReportsJS create the final PDF file.

This approach is useful for invoices, summaries, forms, and any business reports that need flexible formatting. The content below uses common technical vocabulary and is designed for developers of all levels.

2. How ActiveReportsJS Works in a Next.js Environment

ActiveReportsJS is a client-side and server-side reporting engine. When used with Next.js API routes, it can render PDF files without exposing sensitive logic to the browser. The typical flow includes:

- Loading a JSON report template (.rdl.json)

- Passing parameters and dynamic data

- Rendering the report into a PDF stream

- Returning the file to the user

Below is a simplified example of how the process looks.

2.1 JSON Template Structure

A basic ActiveReportsJS template includes layout, text boxes, and bindings. Templates are normally created using the ActiveReportsJS Designer tool.

{

"Name": "StudentReport",

"Type": "report",

"DataSources": [

{

"Name": "ReportDataSource",

"ConnectionProperties": {

"DataProvider": "JSON",

"ConnectString": "jsondata="

}

}

],

"DataSets": [

{

"Name": "ReportDataSet",

"Query": {

"DataSourceName": "ReportDataSource",

"CommandText": "$.value[*]"

},

"Fields": [

{

"Name": "qrcd",

"DataField": "qrcd",

"Type": "String"

},

{

"Name": "studentId",

"DataField": "studentId",

"Type": "String"

},

{

"Name": "ticketInfo",

"DataField": "ticketInfo",

"Type": "Object"

}

]

}

],

"Page": {

"PageWidth": "8.5in",

"PageHeight": "11in",

"Margins": {

"Top": "0.5in",

"Bottom": "0.5in",

"Left": "0.5in",

"Right": "0.5in"

}

},

"Body": {

"ReportItems": [

{

"Type": "textbox",

"Name": "QrcdValue",

"Value": "=Fields!qrcd.Value",

"Style": {

"FontFamily": "Noto Sans JP",

"FontSize": "10pt"

},

"Top": "0.9in",

"Left": "2in",

"Width": "5.5in",

"Height": "0.25in"

},

{

"Type": "textbox",

"Name": "TicketIdValue",

"Value": "=Fields!ticketInfo.Value.ticketId",

"Style": {

"FontFamily": "Noto Sans JP",

"FontSize": "10pt"

},

"Top": "2.3in",

"Left": "2in",

"Width": "5.5in",

"Height": "0.25in"

}

],

"Height": "9.8in"

}

}

2.2 Basic PDF Generation with Parameters

The simplest example: using parameters to inject values into the report template.

// pages/api/basic-report.ts

import { NextApiRequest, NextApiResponse } from 'next';

import {

outputPDFByARJ,

createParameter

} from '@/common/utils/sample-active-report-js';

export default async function handler(req: NextApiRequest, res: NextApiResponse) {

try {

const { title, author } = req.query;

// Create simple parameters

const parameters = [

createParameter('reportTitle', title || 'Default Report Title'),

createParameter('authorName', author || 'Unknown Author'),

createParameter('generationDate', new Date().toLocaleDateString('ja-JP'))

];

// Generate PDF using the utility function

await outputPDFByARJ({

jsonUrl: '/data/basic-report-template.json',

fileName: `basic-report-${Date.now()}.pdf`,

parameters

});

} catch (error) {

console.error('PDF Generation Error:', error);

res.status(500).json({ error: 'Failed to generate PDF' });

}

}

2.3 Working with Complex Data Structures

When dealing with complex business data containing nested objects, ActiveReportsJS requires careful handling. The template in our example expects data with nested structures like ticket information, status, and procedure details.

// pages/api/generate-student-report.ts

import { NextApiRequest, NextApiResponse } from 'next';

import {

outputPDFByARJ,

createParameter,

flattenNestedObjectToParameters

} from '@/common/utils/sample-active-report-js';

export default async function handler(req: NextApiRequest, res: NextApiResponse) {

try {

const { studentId, orgCode } = req.query;

// Sample complex data structure matching the report template

const reportData = {

value: [

{

qrcd: `QR${Date.now()}`,

studentId: studentId || "STU001",

orgCode: orgCode || "ORG001",

ticketInfo: {

ticketId: `TICKET${studentId || '001'}`,

seatNumber: "A-001",

facultyName: "Faculty of Arts",

departmentName: "Design",

categoryName: "General Admission",

area: "Main Campus"

},

numberInfo: {

number: "2024001",

appNumber: `APP${studentId || '001234'}`,

schoolName: "Sample High School"

},

resultInfo: {

status: "Passed",

comment: "Congratulations on your success!"

},

procedures: {

fee: "$3,000",

startDate: "2024-04-01",

endDate: "2024-04-30"

},

qrCodeReference: {

comment: "Scan for details",

destAddress: "contact@example.com",

destName: "Admission Office"

}

}

]

};

// Method 1: Using createParameter for simple values

const simpleParams = [

createParameter('reportTitle', 'Admission Certificate'),

createParameter('generatedDate', new Date().toISOString().split('T')[0])

];

// Method 2: Using flattenNestedObjectToParameters for complex objects

const nestedData = {

student: {

name: "John Doe",

studentId: "S2024001"

},

contact: {

email: "john.doe@example.com",

phone: "+1-555-0123"

}

};

const flattenedParams = flattenNestedObjectToParameters(nestedData);

// Combine all parameters

const allParameters = [...simpleParams, ...flattenedParams];

// Generate PDF using the utility function

await outputPDFByARJ({

jsonUrl: '/data/student-report-template.json', // Updated generic filename

fileName: `report-${Date.now()}.pdf`,

parameters: allParameters,

data: reportData

});

} catch (error) {

console.error('PDF Generation Error:', error);

res.status(500).json({ error: 'Failed to generate PDF' });

}

}

2.4 Client-Side Integration with React Components

Here's how to integrate PDF generation into your React components:

// components/ReportGenerator.tsx

import { useState } from 'react';

import {

outputPDFByARJ,

createParameter,

flattenNestedObjectToParameters

} from '@/common/utils/sample-active-report-js';

// Define interface for data structure

interface ReportData {

qrcd: string;

studentId: string;

orgCode: string;

ticketInfo: {

ticketId: string;

seatNumber: string;

facultyName: string;

departmentName: string;

categoryName: string;

area: string;

};

numberInfo: {

number: string;

appNumber: string;

schoolName: string;

};

resultInfo: {

status: string;

comment: string;

};

procedures: {

fee: string;

startDate: string;

endDate: string;

};

qrCodeReference: {

comment: string;

destAddress: string;

destName: string;

};

}

export const ReportGenerator = () => {

const [isGenerating, setIsGenerating] = useState(false);

const [studentId, setStudentId] = useState('');

const [orgCode, setOrgCode] = useState('');

const generateReport = async () => {

if (!studentId || !orgCode) {

alert('Please enter Student ID and Org Code');

return;

}

setIsGenerating(true);

try {

// Prepare complex nested data

const data: ReportData = {

qrcd: `QR${Date.now()}`,

studentId,

orgCode,

ticketInfo: {

ticketId: `TICKET${studentId}`,

seatNumber: 'A-001',

facultyName: 'Faculty of Arts',

departmentName: 'Design',

categoryName: 'General Admission',

area: 'Main Campus'

},

numberInfo: {

number: studentId,

appNumber: `APP${studentId}`,

schoolName: 'Sample High School'

},

resultInfo: {

status: 'Passed',

comment: 'Congratulations on your success.'

},

procedures: {

fee: '$3,000',

startDate: '2024-04-01',

endDate: '2024-04-30'

},

qrCodeReference: {

comment: 'Access Link',

destAddress: 'contact@example.com',

destName: 'Office'

}

};

// Create parameters using utility functions

const parameters = [

createParameter('reportTitle', 'Admission Certificate'),

createParameter('generationTime', new Date().toLocaleString('en-US')),

...flattenNestedObjectToParameters({

metadata: {

version: '1.0',

generatedBy: 'Report System'

}

})

];

// Generate PDF with complex data

await outputPDFByARJ({

jsonUrl: '/data/student-report-template.json',

fileName: `admission-certificate-${studentId}.pdf`,

parameters,

data: { value: [data] } // Wrap in { value: [...] } as expected by template

});

alert('PDF generated successfully!');

} catch (error) {

console.error('Generation failed:', error);

alert('Failed to generate PDF. Please try again.');

} finally {

setIsGenerating(false);

}

};

return { generateReport };

}

2.5 Understanding the outputPDFByARJ Function

Before diving into complex examples, let's understand the outputPDFByARJ function - this is the main utility function for generating PDFs from JSON templates:

/**

* Generates and downloads a PDF file from a report definition JSON (RDL).

* This function fetches the report layout, applies specified parameters, runs the report,

* and then exports the result as a PDF, triggering a download in the browser.

*/

export const outputPDFByARJ = async ({

jsonUrl,

fileName,

parameters,

password,

data,

}: OutputPdfParams): Promise => {

// Check if running in browser environment

if (typeof window === 'undefined') {

console.log('PDF Output Error: Not running in browser environment');

return;

}

// Helper: Temporarily neutralize i18n mutating methods to avoid vendor side-effects

const runWithI18nPatched = async (work: () => Promise) => {

// Save original methods

const i18nAny = i18n as unknown as Record<string, unknown>;

const original = {

use: i18nAny.use,

init: i18nAny.init,

changeLanguage: i18nAny.changeLanguage,

addResourceBundle: i18nAny.addResourceBundle,

addResources: i18nAny.addResources,

addResource: i18nAny.addResource,

loadLanguages: i18nAny.loadLanguages,

loadNamespaces: i18nAny.loadNamespaces,

};

// Patch to no-ops (non-mutating)

i18nAny.use = () => i18n;

i18nAny.init = () => i18n;

i18nAny.changeLanguage = () => i18n.language;

i18nAny.addResourceBundle = () => undefined;

i18nAny.addResources = () => undefined;

i18nAny.addResource = () => undefined;

i18nAny.loadLanguages = () => undefined;

i18nAny.loadNamespaces = () => undefined;

try {

await work();

} finally {

// Restore originals

i18nAny.use = original.use;

i18nAny.init = original.init;

i18nAny.changeLanguage = original.changeLanguage;

i18nAny.addResourceBundle = original.addResourceBundle;

i18nAny.addResources = original.addResources;

i18nAny.addResource = original.addResource;

i18nAny.loadLanguages = original.loadLanguages;

i18nAny.loadNamespaces = original.loadNamespaces;

}

};

await runWithI18nPatched(async () => {

// Dynamically import ActiveReports to avoid SSR issues

const { Core, PdfExport } = await import(

'./wrappers/activereports-wrapper'

);

// Fetch the report layout from the provided URL

const response = await fetch(jsonUrl);

const jsonData = await response.json();

// Modify ConnectString with JSON data

jsonData.DataSources[0].ConnectionProperties.ConnectString = `jsondata=${JSON.stringify(data)}`;

const fontsToRegister = [

{

name: 'IPA EXG',

source: '/fonts/ipaexg.ttf',

},

{

name: 'IPA EXM',

source: '/fonts/ipaexm.ttf',

},

];

await Core.FontStore.registerFonts(...fontsToRegister);

// Load the report definition

const report = new Core.PageReport();

await report.load(jsonData);

if (!data) {

// Normalize and apply parameters to the report

const normalizedParameters = parameters.map((param) => ({

...param,

Value: normalizeParameterValue(param.Value),

}));

// Apply normalized parameters to the report

await report.reportParameters.applySteps(normalizedParameters as any);

}

// Run the report to generate the document

const pageDocument = await report.run();

// Export the document to a PDF blob and initiate download

const pdfSettings = password

? { security: { userPassword: password } }

: undefined;

const pdfBlob = await PdfExport.exportDocument(pageDocument, pdfSettings);

pdfBlob.download(fileName);

});

};

Parameters of the outputPDFByARJ function:

jsonUrl: Path to the JSON template filefileName: Output PDF file nameparameters: Array of parameters to pass to the reportpassword (optional): Password to protect the PDFdata: JSON data to bind to the report template

The function will:

- Fetch the JSON template from the URL

- Apply parameters and data

- Render the report into a PDF

- Trigger download in the browser

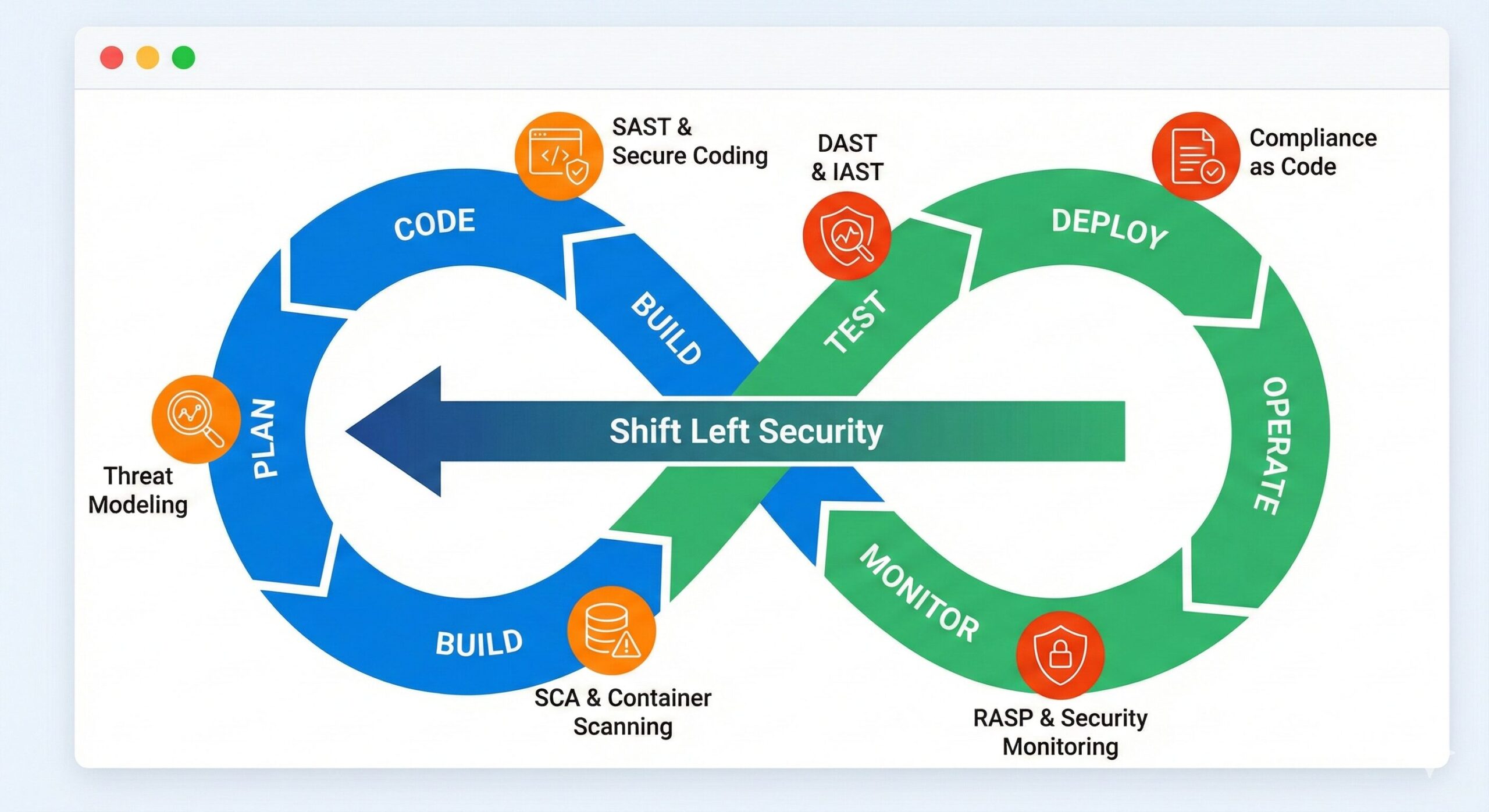

3. Best Practices When Using ActiveReportsJS with Next.js

- Store report templates in a secure directory of your project.

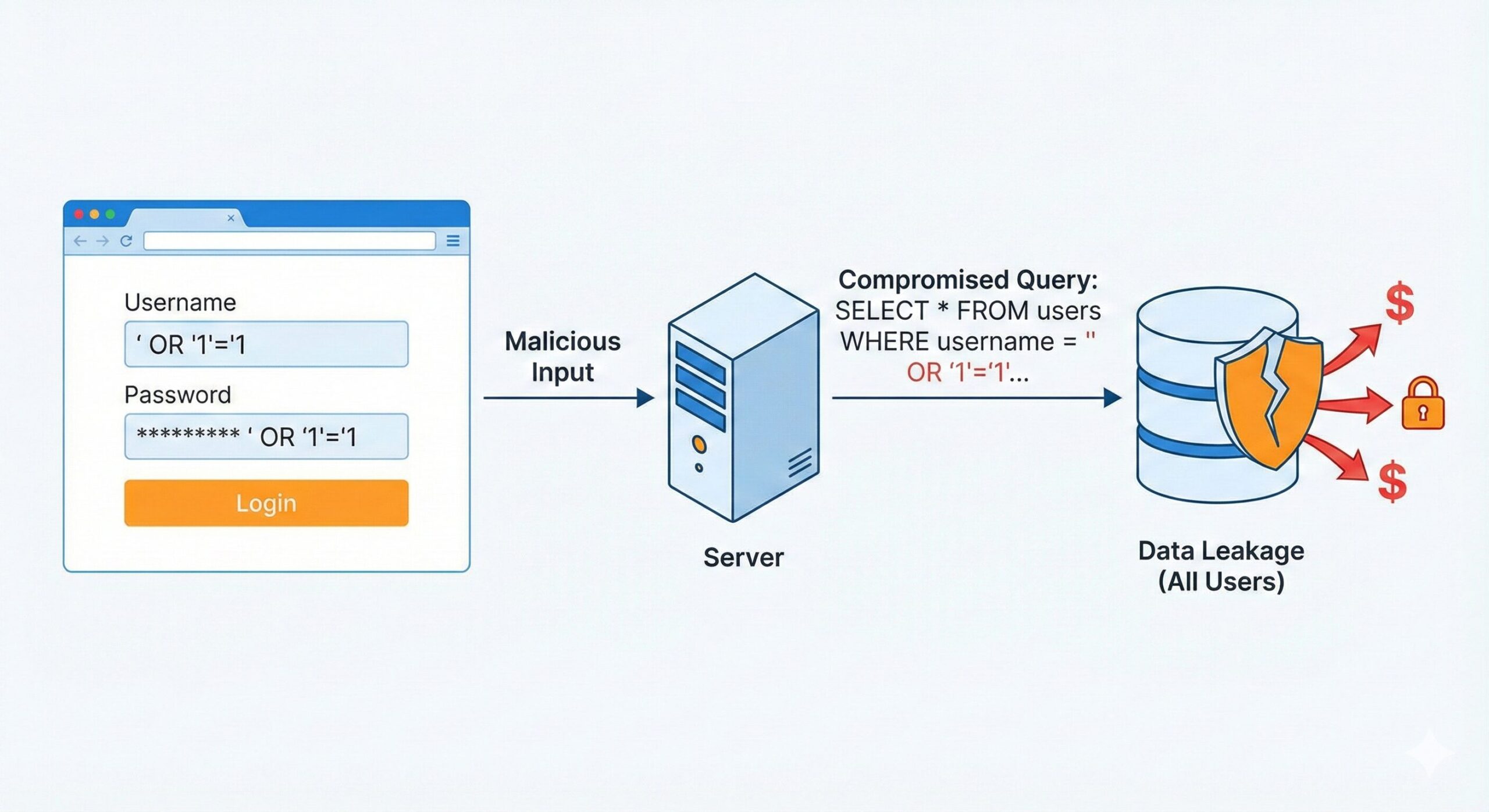

- Validate all parameters to avoid unwanted data injection.

- Use API routes to protect server-side rendering logic.

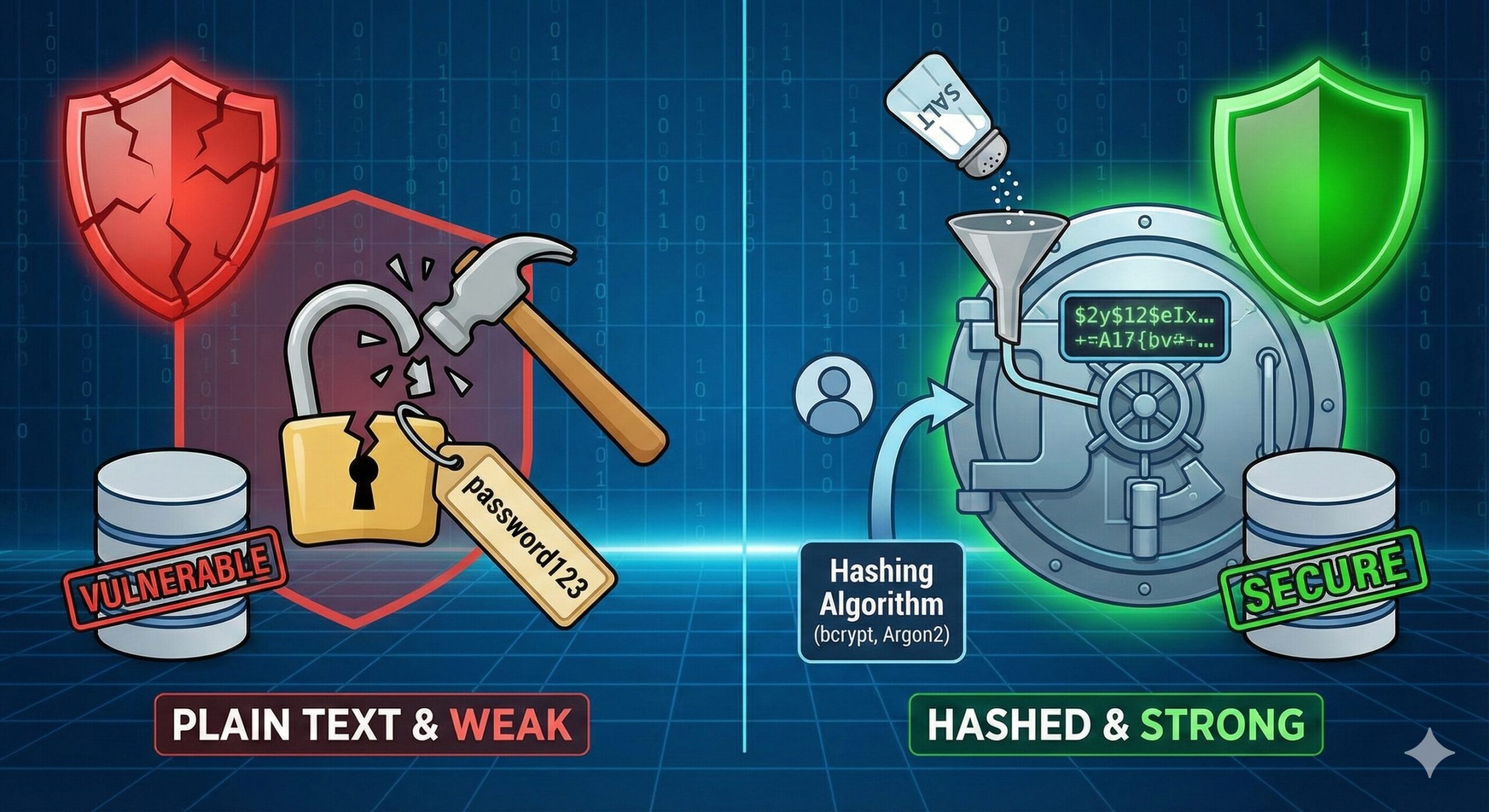

- Do not expose confidential structures from real projects.

- Always review generated code before deployment.

4. Additional Considerations

- Using ActiveReportsJS together with i18next / other i18n libraries:

If your Next.js project already uses i18next (or similar JavaScript localization / translation libraries), be aware that there can be potential conflicts — especially if your report templates or the rendering logic depend on global locale settings, overridden prototypes, or modifications to built‑in objects.

To avoid unexpected behavior (e.g. locale/format overrides, translation JSON interfering with report JSON, or i18next initialization affecting global state), you should isolate the report‑rendering context from the rest of your app. For example: load and render the JSON template without i18next’s context, or ensure i18next is not active / initialized when generating PDF on the server.

- Licensing: Free for development / evaluation — but production requires a valid license key:

ActiveReportsJS provides a “trial / evaluation mode” which allows you to develop locally without a license key. However, in this mode, exported reports will contain a watermark and the standalone designer has a limited evaluation period. For more details, see the official licensing documentation.

When you deploy to staging or production (or distribute your application), you need to purchase the appropriate ActiveReportsJS license (e.g., a distribution / deployment license), generate a distribution key, and configure your application to set that license key (typically via Core.setLicenseKey(...) or similar). This will remove the watermark and ensure compliance with licensing terms.

5. Conclusion

Using ActiveReportsJS with Next.js provides a clean and scalable way to generate PDF files from JSON templates. By combining parameters, dynamic data, and predefined layouts, developers can create powerful report systems without building UI elements manually.

If you are exploring modern reporting solutions and want to apply them in real-world applications, our company encourages continuous learning and high-quality engineering. For more information about our technology expertise or to discuss potential collaboration, please reach out through our official contact channels.

Whether you need scalable software solutions, expert IT outsourcing, or a long-term development partner, ISB Vietnam is here to deliver. Let’s build something great together—reach out to us today. Or click here to explore more ISB Vietnam's case studies.

Reference Documentation